City Photogrammetry & Auto Masking (Python)

Introduction

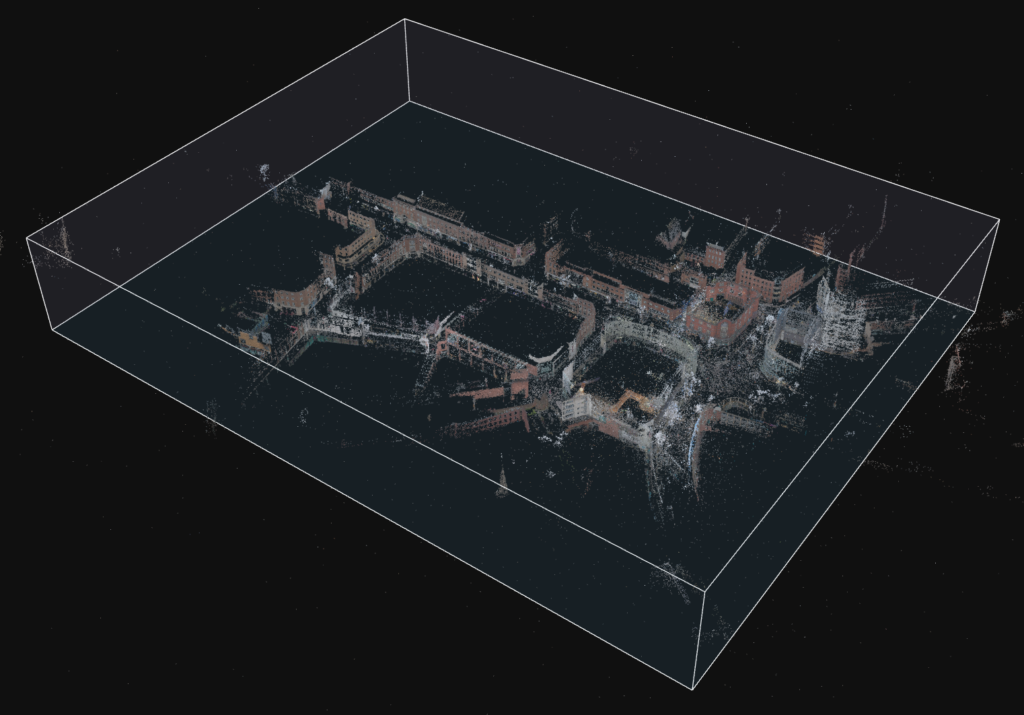

The city of Exeter has a rich history, with layers of past urban development hidden beneath its modern streets. My latest project aims to capture both the visible city center and its underground passages using photogrammetry. While the main project is to capture the Underground Passages Exeter, it is also important to capture the city center as to precisley locate the passages underneath it. This ongoing work will contribute to reconstruction, documentation, AR/VR applications, cross-section surveys, and more. In this post, I will discuss my current progress and introduce a Python script that automates the masking of people and cars in photogrammetry datasets. I do not want people and cars as most of them are moving between the images and therefore introduce unwanted objects

Capturing the City Center

Photographing a busy urban environment presents unique challenges. Streets are constantly filled with people and vehicles, making it difficult to obtain clean datasets for 3D reconstruction. To mitigate this issue, I have been experimenting with different capture techniques, including:

- Shooting during early mornings or off-peak hours

Taking multiple images from the same viewpoint to later blend out moving elements- Using computer vision to remove transient objects

Despite these strategies, some obstructions are inevitable.

Automating Object Masking with Python

To streamline my photogrammetry workflow, I developed a Python script that automatically detects and masks people and vehicles in my photogrammetry images. This automation requires only an input directory path and an optional output directory path, significantly reducing the need for manual clean-up and improving the quality of 3D reconstructions. The script uses:

- Computer Vision (OpenCV): Used for reading images and handling image input/output.

- Deep Learning Models (Mask R-CNN): A pre-trained Mask R-CNN model from TorchVision automatically detects unwanted objects. (maskrcnn_resnet50_fpn)

How It Works

Understanding the workflow behind the automation helps illustrate how the script processes images efficiently. Below is a step-by-step breakdown of its key components:

Image Pre-processing. The script loads each image. Then, it uses TorchVision’s built-in transforms—extracted from the pre-trained model’s weights—to resize, normalize, and prepare the image for inference.

Object Detection. The pre-trained Mask R-CNN model runs inference on the pre-processed image. It identifies people and vehicles by generating soft masks for each detected instance.

Mask Generation. For detections that exceed a defined confidence threshold, the script converts the soft masks into binary masks. These individual masks are then combined into a single mask image that highlights all unwanted objects.

Output. The script saves the generated mask image using a naming convention that is compatible with Reality Capture. If no output directory is specified via the CLI, the script defaults to saving the mask files in the same directory as the input images.

Preliminary Results

In initial tests on a dataset of city centre images, the automated masking process has yielded promising results:

- Reduced Manual Clean up: By automating the detection and masking of transient objects, the script eliminates the time-consuming process of manual image editing hundreds of photographs.

- Improved Feature Matching: Masking out moving objects ensures that the photogrammetry software focuses on static, permanent features, leading to better alignment and more accurate tie points across images.

- Cleaner 3D Reconstructions: With unwanted objects removed from the input images, the final 3D models and texture of it exhibit fewer artifacts and a higher level of detail, particularly in dynamic urban environments.

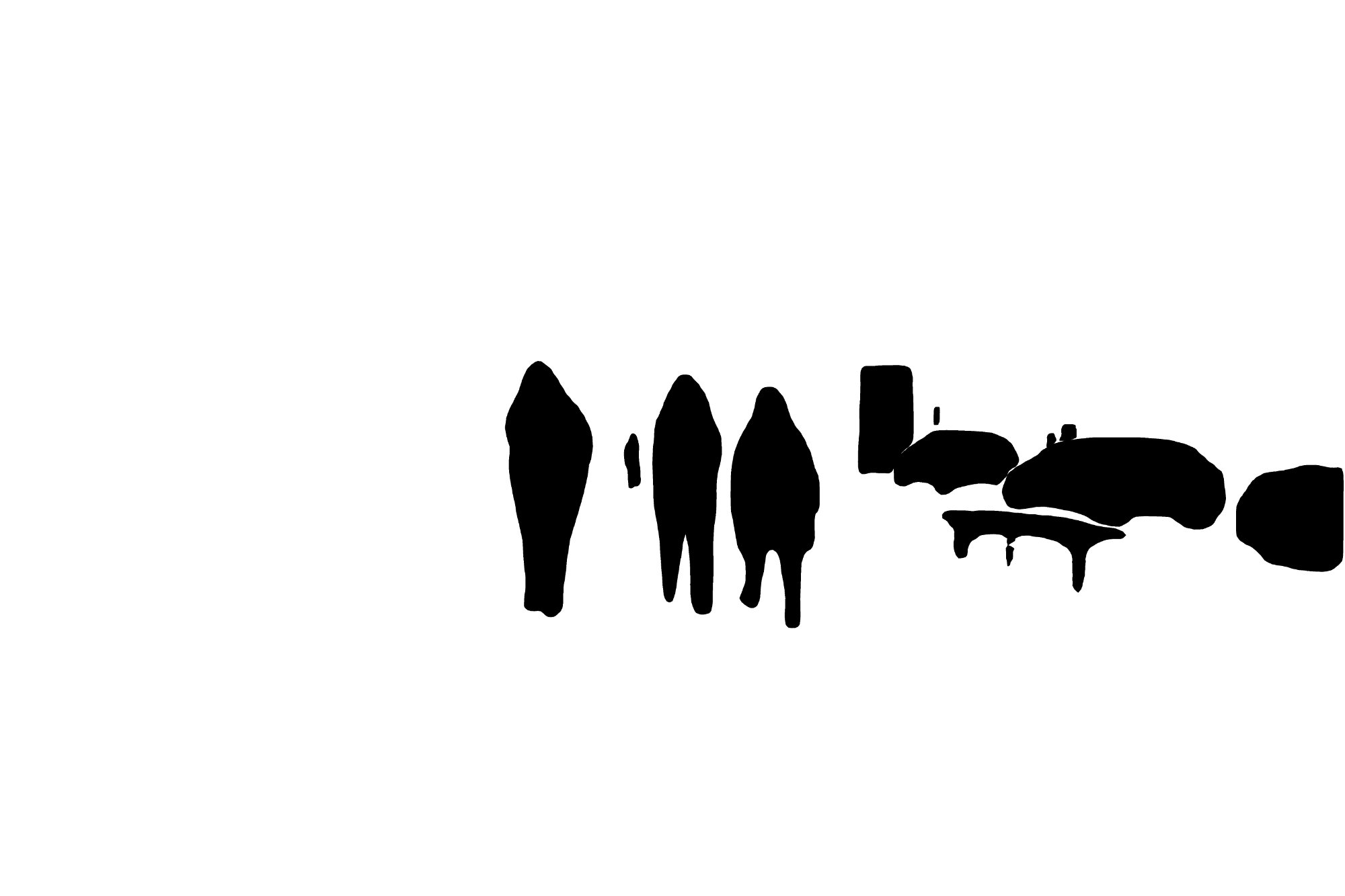

Below is a comparison of a single photograph versus processed versions with masked people and vehicles:

Unfortunately, the masks are not yet optimal and further testing is needed to make them more accurate. However, the script gives a first result that I can work with for now. Maybe a different model or threshold settings will give more accurate masks.

Code

This code snippet was developed with my instructions and refined with assistance from ChatGPT.

import cv2

import torch

import numpy as np

import torchvision

from torchvision.models.detection import maskrcnn_resnet50_fpn, MaskRCNN_ResNet50_FPN_Weights

from PIL import Image

# Set up device and load model with built-in transforms

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

weights = MaskRCNN_ResNet50_FPN_Weights.DEFAULT

model = maskrcnn_resnet50_fpn(weights=weights).to(device)

model.eval()

preprocess = weights.transforms()

def create_mask(image, score_threshold=0.5):

# Preprocess: convert BGR to RGB, then apply the model's transforms

rgb_image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

pil_img = Image.fromarray(rgb_image)

tensor_img = preprocess(pil_img).to(device)

# Run inference

with torch.no_grad():

preds = model([tensor_img])[0]

# Initialize an empty mask

h, w = image.shape[:2]

mask = np.zeros((h, w), dtype=np.uint8)

# Combine masks from detections above the confidence threshold

for i, score in enumerate(preds['scores']):

if score >= score_threshold:

m = (preds['masks'][i, 0] > 0.5).byte().cpu().numpy() * 255

mask = cv2.bitwise_or(mask, m)

return mask

Photogrammetry of the City Centre

Conclusion

Next Steps

This is just the beginning of the Exeter photogrammetry project. Moving forward, I will:

- Expand the data collection to underground passages

- Improve the masking script for better edge detection and context-aware inpainting

- Test different reconstruction techniques for historical visualization

Stay tuned for the next update, where I will delve into capturing Exeter’s underground spaces!

Conclusion

The automated object masking worked well, significantly reducing manual editing and enhancing the overall quality of the 3D reconstructions. Integrating aerial drone data would further improve the process by providing better GPS accuracy and comprehensive street coverage. However, due to restrictions—parts of the city centre and high street are falling within a restricted fly zone near a prison—a drone flight was not feasible. Future work will explore alternative methods to capture high-quality aerial data in such constrained urban environments.